[OpenShift] RHOCP 4.14.15 Installation - 9. Cluster Configuration

RHOCP 4.14.15 Installation (OpenShift Installation) - Cluster Configuration

Test Environment

Red Hat Enterprise Linux 9.2 (Plow)

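1. Basic Cluster Configuration

1.1. Enabling Command Completion

Run the following command to enable tab completion for the openshift-install command.

openshift-install completion bash > /etc/bash_completion.d/openshift-install

To apply it, start a new session, or run bash to pick it up in the current session right away.

Pressing Tab after the command name now lists the available subcommands.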

[root@bastion ~]# openshift-install

agent analyze completion coreos create destroy explain gather graph help migrate version wait-for

openshift-install wait-for install-complete --dir=/var/www/html/ocp --log-level=debug

This command waits until the OCP cluster's API responds, so its output tells you when the cluster has finished coming up.

DEBUG OpenShift Installer 4.14.15

DEBUG Built from commit 6ee6900a509dfb4a3fd568f741e163655bd3f45c

DEBUG Loading Install Config...

DEBUG Loading SSH Key...

DEBUG Loading Base Domain...

DEBUG Loading Platform...

DEBUG Loading Cluster Name...

DEBUG Loading Base Domain...

DEBUG Loading Platform...

DEBUG Loading Networking...

DEBUG Loading Platform...

DEBUG Loading Pull Secret...

DEBUG Loading Platform...

DEBUG Using Install Config loaded from state file

DEBUG Loading Agent Config...

INFO Waiting up to 40m0s (until 3:26PM KST) for the cluster at https://api.openshift.ig.local:6443 to initialize...

DEBUG Still waiting for the cluster to initialize: Cluster operators authentication, console are not available

1.2. Setting the KUBECONFIG Environment Variable

On the bastion node, add the KUBECONFIG setting.

vi ~/.bash_profile

export KUBECONFIG=/var/www/html/ocp/auth/kubeconfig

Apply the setting.

source ~/.bash_profile

Now run oc get co. The setup is complete only when every operator shows True in the AVAILABLE column.

NAME VERSION AVAILABLE PROGRESSING DEGRADED SINCE MESSAGE

authentication 4.14.15 False False True 3h3m OAuthServerRouteEndpointAccessibleControllerAvailable: Get "https://oauth-openshift.apps.openshift.ig.local/healthz": EOF

baremetal 4.14.15 True False False 3h2m

cloud-controller-manager 4.14.15 True False False 3h6m

cloud-credential 4.14.15 True False False 3h45m

cluster-autoscaler 4.14.15 True False False 3h2m

config-operator 4.14.15 True False False 3h4m

console 4.14.15 False True False 177m DeploymentAvailable: 0 replicas available for console deployment...

control-plane-machine-set 4.14.15 True False False 3h2m

csi-snapshot-controller 4.14.15 True False False 3h3m

dns 4.14.15 True False False 3h2m

etcd 4.14.15 True False False 3h1m

image-registry 4.14.15 True False False 177m

ingress 4.14.15 True False True 12m The "default" ingress controller reports Degraded=True: DegradedConditions: One or more other status conditions indicate a degraded state: CanaryChecksSucceeding=False (CanaryChecksRepetitiveFailures: Canary route checks for the default ingress controller are failing)

insights 4.14.15 True False False 177m

kube-apiserver 4.14.15 True False False 3h

kube-controller-manager 4.14.15 True False False 3h

kube-scheduler 4.14.15 True False False 179m

kube-storage-version-migrator 4.14.15 True False False 3h3m

machine-api 4.14.15 True False False 3h2m

machine-approver 4.14.15 True False False 3h3m

machine-config 4.14.15 True False False 3h2m

marketplace 4.14.15 True False False 3h2m

monitoring 4.14.15 True False False 10m

network 4.14.15 True False False 3h4m

node-tuning 4.14.15 True False False 3h3m

openshift-apiserver 4.14.15 True False False 173m

openshift-controller-manager 4.14.15 True False False 177m

openshift-samples 4.14.15 True False False 177m

operator-lifecycle-manager 4.14.15 True False False 3h3m

operator-lifecycle-manager-catalog 4.14.15 True False False 3h3m

operator-lifecycle-manager-packageserver 4.14.15 True False False 177m

service-ca 4.14.15 True False False 3h3m

storage 4.14.15 True False False 3h3m
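Scanning the table by eye is easy to get wrong, so here is a minimal sketch of a filter that prints only the operators that are not yet healthy. The function name unhealthy_operators is hypothetical, and the sketch assumes the column order shown above (NAME, VERSION, AVAILABLE, PROGRESSING, DEGRADED).

```shell
# Reads `oc get co` output on stdin and prints the name of every operator
# whose AVAILABLE column is not True or whose DEGRADED column is not False.
# The header row (first field "NAME") and short/blank lines are skipped.
unhealthy_operators() {
  awk '$1 != "NAME" && NF >= 5 && ($3 != "True" || $5 != "False") { print $1 }'
}

# Usage (assumes KUBECONFIG is exported as described in this section):
#   oc get co | unhealthy_operators
```

An empty result means every operator reports AVAILABLE=True and DEGRADED=False.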

1.3. Creating the Image Registry

The registry storage is set to an empty directory (emptyDir) here. emptyDir storage is ephemeral, so images are lost when the registry pod restarts. To use cloud resources for storage instead later, the setting below needs to be changed.

Set the image registry storage to emptyDir.

oc patch configs.imageregistry.operator.openshift.io cluster --type merge --patch '{"spec":{"storage":{"emptyDir":{}}}}'

config.imageregistry.operator.openshift.io/cluster patched

Patch the Image Registry Operator configuration to switch managementState from Removed to Managed.

oc patch configs.imageregistry.operator.openshift.io cluster --type merge --patch '{"spec":{"managementState": "Managed"}}'

config.imageregistry.operator.openshift.io/cluster patched
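The "patched" message only confirms that the request was accepted. As a rough sketch, the value can be read back with a jsonpath query. The function name registry_is_managed is hypothetical, and the check is an addition that was not part of the original procedure.

```shell
# Prints the Image Registry Operator's managementState and returns non-zero
# unless it is "Managed". Relies only on `oc get ... -o jsonpath`.
registry_is_managed() {
  local state
  state=$(oc get configs.imageregistry.operator.openshift.io cluster \
    -o jsonpath='{.spec.managementState}') || return 1
  echo "managementState=${state}"
  [ "$state" = "Managed" ]
}

# Usage:
#   registry_is_managed && echo "registry is managed"
```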

1.4. Disabling the OperatorHub Default Sources

In a disconnected environment, perform this step on the bastion node.

OperatorHub is configured by default when OpenShift is installed, so its default catalog sources must be disabled first.

oc patch OperatorHub cluster --type json -p '[{"op": "add", "path": "/spec/disableAllDefaultSources", "value":true}]'

operatorhub.config.openshift.io/cluster patched

1.5. Changing Node Labels

Add the infra role label to the infra nodes.

oc label node infra01.openshift.ig.local node-role.kubernetes.io/infra=""

node/infra01.openshift.ig.local labeled

oc label node infra02.openshift.ig.local node-role.kubernetes.io/infra=""

node/infra02.openshift.ig.local labeled
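With more than a couple of infra nodes, the two commands above can be folded into a loop. This is a minimal sketch: the function name label_infra_nodes is hypothetical, and the --overwrite flag is an addition so that re-running the function on an already-labeled node does not fail.

```shell
# Adds the infra role label to each node name passed as an argument.
# An empty label value after "=" is equivalent to infra="" used above.
label_infra_nodes() {
  local node
  for node in "$@"; do
    oc label node "$node" node-role.kubernetes.io/infra= --overwrite || return 1
  done
}

# Usage with the two infra nodes from this environment:
#   label_infra_nodes infra01.openshift.ig.local infra02.openshift.ig.local
```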

oc get nodes

The ROLES column now shows the infra role on the infra nodes.

NAME STATUS ROLES AGE VERSION

infra01.openshift.ig.local Ready infra,worker 82m v1.27.10+c79e5e2

infra02.openshift.ig.local Ready infra,worker 82m v1.27.10+c79e5e2

master01.openshift.ig.local Ready control-plane,master 4h20m v1.27.10+c79e5e2

master02.openshift.ig.local Ready control-plane,master 4h20m v1.27.10+c79e5e2

master03.openshift.ig.local Ready control-plane,master 4h20m v1.27.10+c79e5e2

worker01.openshift.ig.local Ready worker 82m v1.27.10+c79e5e2

worker02.openshift.ig.local Ready worker 82m v1.27.10+c79e5e2

worker03.openshift.ig.local Ready worker 82m v1.27.10+c79e5e2

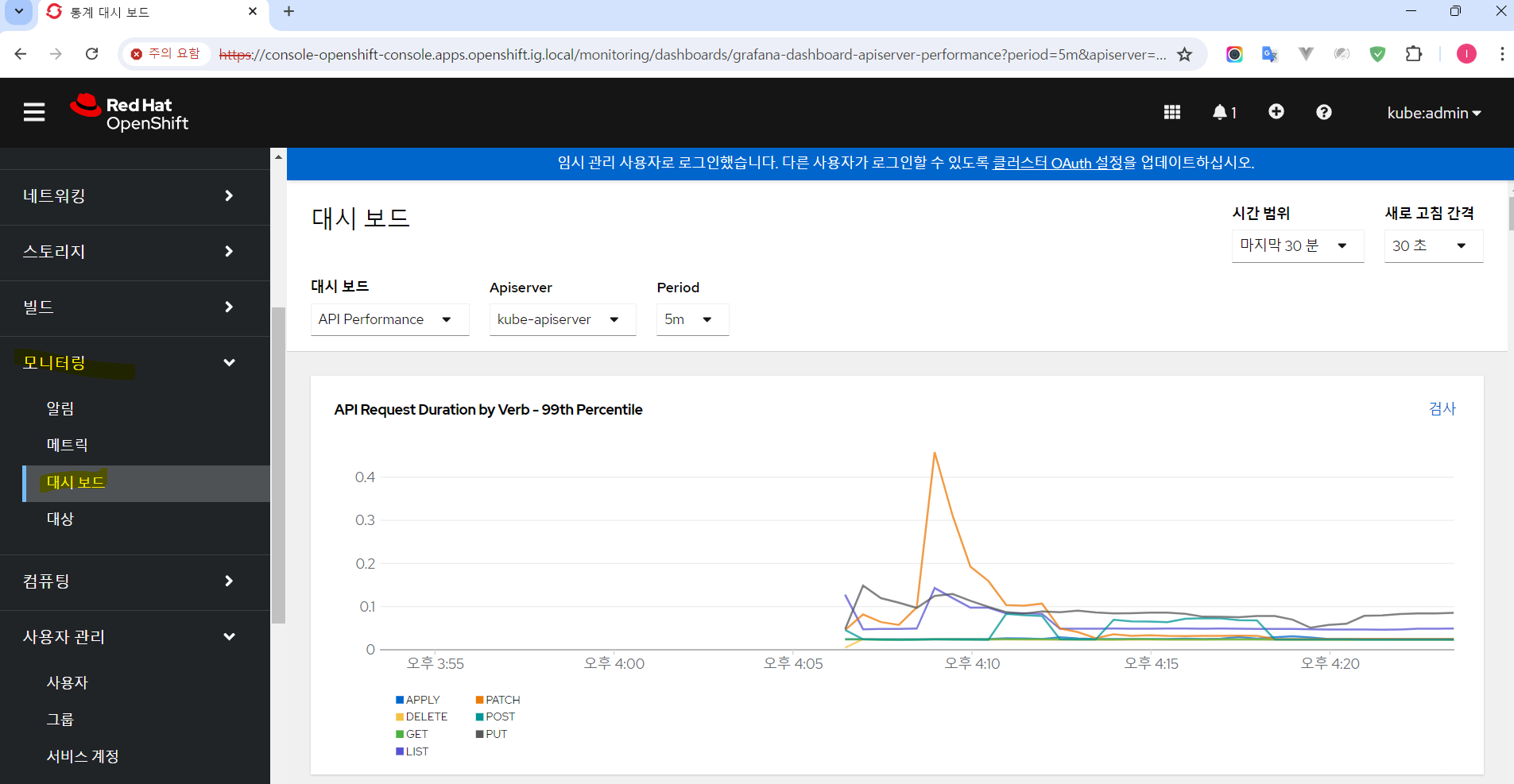

1.6. Relocating to Infra Nodes (Logging/Monitoring)

By default these resources are scheduled on worker nodes. Use the nodeSelector option to move them to the infra nodes.

mkdir -p /root/openshift/yaml && cd $_

vi cluster-monitoring-configmap.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  name: cluster-monitoring-config
  namespace: openshift-monitoring
data:
  config.yaml: |+
    alertmanagerMain:
      nodeSelector:
        node-role.kubernetes.io/infra: ""
    prometheusK8s:
      nodeSelector:
        node-role.kubernetes.io/infra: ""
    prometheusOperator:
      nodeSelector:
        node-role.kubernetes.io/infra: ""
    k8sPrometheusAdapter:
      nodeSelector:
        node-role.kubernetes.io/infra: ""
    kubeStateMetrics:
      nodeSelector:
        node-role.kubernetes.io/infra: ""
    telemeterClient:
      nodeSelector:
        node-role.kubernetes.io/infra: ""
    openshiftStateMetrics:
      nodeSelector:
        node-role.kubernetes.io/infra: ""
    thanosQuerier:
      nodeSelector:
        node-role.kubernetes.io/infra: ""

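node-exporter is the exception: one pod must run on every node, so it is not given a nodeSelector.

oc get pods -n openshift-monitoring -o wide

In the output below, the logging/monitoring pods are still spread across several nodes.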

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

alertmanager-main-0 6/6 Running 0 80m 10.131.0.12 infra01.openshift.ig.local <none> <none>

alertmanager-main-1 6/6 Running 0 80m 10.130.2.11 worker01.openshift.ig.local <none> <none>

cluster-monitoring-operator-cc696f545-tjpwh 1/1 Running 0 4h16m 10.128.0.23 master03.openshift.ig.local <none> <none>

kube-state-metrics-76cd4bdf98-n8wj5 3/3 Running 0 81m 10.130.2.8 worker01.openshift.ig.local <none> <none>

monitoring-plugin-5678f6d669-6w6p6 1/1 Running 0 81m 10.128.2.7 infra02.openshift.ig.local <none> <none>

monitoring-plugin-5678f6d669-crmcw 1/1 Running 0 81m 10.131.0.7 infra01.openshift.ig.local <none> <none>

node-exporter-ct7nr 2/2 Running 0 81m 172.16.0.176 worker01.openshift.ig.local <none> <none>

node-exporter-d27gt 2/2 Running 0 81m 172.16.0.179 infra01.openshift.ig.local <none> <none>

node-exporter-jlkv9 2/2 Running 0 81m 172.16.0.173 master01.openshift.ig.local <none> <none>

node-exporter-nw6b7 2/2 Running 0 81m 172.16.0.175 master03.openshift.ig.local <none> <none>

node-exporter-pn49x 2/2 Running 0 81m 172.16.0.177 worker02.openshift.ig.local <none> <none>

node-exporter-rtfj2 2/2 Running 0 81m 172.16.0.178 worker03.openshift.ig.local <none> <none>

node-exporter-rz6m7 2/2 Running 0 81m 172.16.0.180 infra02.openshift.ig.local <none> <none>

node-exporter-zs7tr 2/2 Running 0 81m 172.16.0.174 master02.openshift.ig.local <none> <none>

openshift-state-metrics-86bc944564-z4lqc 3/3 Running 0 81m 10.128.2.8 infra02.openshift.ig.local <none> <none>

prometheus-adapter-c4c647dd4-h5kkr 1/1 Running 0 81m 10.131.0.8 infra01.openshift.ig.local <none> <none>

prometheus-adapter-c4c647dd4-qlcb5 1/1 Running 0 81m 10.128.2.9 infra02.openshift.ig.local <none> <none>

prometheus-k8s-0 6/6 Running 0 80m 10.130.2.12 worker01.openshift.ig.local <none> <none>

prometheus-k8s-1 6/6 Running 0 80m 10.128.2.12 infra02.openshift.ig.local <none> <none>

prometheus-operator-5f6976c9df-g5zbq 2/2 Running 0 81m 10.129.0.49 master01.openshift.ig.local <none> <none>

prometheus-operator-admission-webhook-6d4d598cb5-bzcqv 1/1 Running 0 4h12m 10.129.2.6 worker03.openshift.ig.local <none> <none>

prometheus-operator-admission-webhook-6d4d598cb5-rkj5g 1/1 Running 0 4h12m 10.130.2.7 worker01.openshift.ig.local <none> <none>

telemeter-client-594846bd56-fndwk 3/3 Running 0 80m 10.131.0.11 infra01.openshift.ig.local <none> <none>

thanos-querier-67d4f469cb-94hj6 6/6 Running 0 81m 10.128.2.10 infra02.openshift.ig.local <none> <none>

thanos-querier-67d4f469cb-xgn5d 6/6 Running 0 81m 10.131.0.10 infra01.openshift.ig.local <none> <none>

Apply the ConfigMap so that these pods are scheduled only on the infra nodes.

oc create -f cluster-monitoring-configmap.yaml

configmap/cluster-monitoring-config created

watch "oc get pod -n openshift-monitoring -o wide"

You can watch in real time as the logging/monitoring pods on the worker nodes go into Terminating and are recreated on the infra nodes.

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

alertmanager-main-0 5/6 Running 0 28s 10.131.0.22 infra01.openshift.ig.local <none> <none>

alertmanager-main-1 6/6 Running 0 60s 10.128.2.15 infra02.openshift.ig.local <none> <none>

cluster-monitoring-operator-cc696f545-tjpwh 1/1 Running 0 4h19m 10.128.0.23 master03.openshift.ig.local <none> <none>

kube-state-metrics-9c56578f7-gjkd5 3/3 Running 0 63s 10.131.0.16 infra01.openshift.ig.local <none> <none>

monitoring-plugin-5678f6d669-6w6p6 1/1 Running 0 84m 10.128.2.7 infra02.openshift.ig.local <none> <none>

monitoring-plugin-5678f6d669-crmcw 1/1 Running 0 84m 10.131.0.7 infra01.openshift.ig.local <none> <none>

node-exporter-ct7nr 2/2 Running 0 84m 172.16.0.176 worker01.openshift.ig.local <none> <none>

node-exporter-d27gt 2/2 Running 0 84m 172.16.0.179 infra01.openshift.ig.local <none> <none>

node-exporter-jlkv9 2/2 Running 0 84m 172.16.0.173 master01.openshift.ig.local <none> <none>

node-exporter-nw6b7 2/2 Running 0 84m 172.16.0.175 master03.openshift.ig.local <none> <none>

node-exporter-pn49x 2/2 Running 0 84m 172.16.0.177 worker02.openshift.ig.local <none> <none>

node-exporter-rtfj2 2/2 Running 0 84m 172.16.0.178 worker03.openshift.ig.local <none> <none>

node-exporter-rz6m7 2/2 Running 0 84m 172.16.0.180 infra02.openshift.ig.local <none> <none>

node-exporter-zs7tr 2/2 Running 0 84m 172.16.0.174 master02.openshift.ig.local <none> <none>

openshift-state-metrics-f454d74b9-cg9jb 3/3 Running 0 63s 10.131.0.17 infra01.openshift.ig.local <none> <none>

prometheus-adapter-f975b94dc-4c7jn 1/1 Running 0 62s 10.131.0.20 infra01.openshift.ig.local <none> <none>

prometheus-adapter-f975b94dc-5wkgc 1/1 Running 0 63s 10.128.2.14 infra02.openshift.ig.local <none> <none>

prometheus-k8s-0 6/6 Running 0 42s 10.131.0.21 infra01.openshift.ig.local <none> <none>

prometheus-k8s-1 6/6 Running 0 59s 10.128.2.16 infra02.openshift.ig.local <none> <none>

prometheus-operator-5786f85b44-vkfpw 2/2 Running 0 73s 10.131.0.15 infra01.openshift.ig.local <none> <none>

prometheus-operator-admission-webhook-58dcd458fb-rn65m 1/1 Running 0 76s 10.131.0.14 infra01.openshift.ig.local <none> <none>

prometheus-operator-admission-webhook-58dcd458fb-wf5wq 1/1 Running 0 76s 10.128.2.13 infra02.openshift.ig.local <none> <none>

telemeter-client-86bb7bb99d-zwnlk 3/3 Running 0 63s 10.131.0.18 infra01.openshift.ig.local <none> <none>

thanos-querier-7554f5c5cf-dr46h 6/6 Running 0 62s 10.131.0.19 infra01.openshift.ig.local <none> <none>

thanos-querier-7554f5c5cf-wn88c 6/6 Running 0 63s 10.128.2.17 infra02.openshift.ig.local <none> <none>
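Rather than reading the NODE column row by row, the result can be checked with a small filter. This is a sketch under assumptions: the function name pods_off_infra is hypothetical, and it identifies infra nodes by the hostname prefix "infra", which matches this environment's naming but not necessarily others.

```shell
# Reads "<pod> <node>" pairs on stdin and prints the pairs whose node name
# does not start with the given prefix (default: "infra").
# node-exporter (one pod per node by design) and cluster-monitoring-operator
# are skipped because the ConfigMap above does not cover them.
pods_off_infra() {
  local prefix="${1:-infra}"
  awk -v p="$prefix" '
    $1 ~ /^(node-exporter|cluster-monitoring-operator)-/ { next }
    NF >= 2 && index($2, p) != 1 { print $1 " " $2 }
  '
}

# Usage:
#   oc get pods -n openshift-monitoring --no-headers \
#     -o custom-columns=NAME:.metadata.name,NODE:.spec.nodeName | pods_off_infra
```

The custom-columns output is used instead of -o wide because the RESTARTS column can contain spaces, which makes positional parsing of the wide format unreliable.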

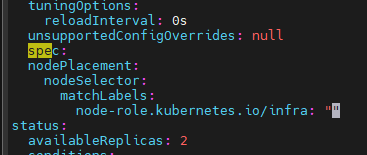

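1.7. Relocating to Infra Nodes (Ingress Controller)

The Ingress Controller must also be changed so that it is scheduled only on the infra nodes.

oc edit ingresscontroller default -n openshift-ingress-operator -o yaml

In the editor, add the nodePlacement block under spec and save. (The first five lines below show the result.)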

spec:
  nodePlacement:
    nodeSelector:
      matchLabels:
        node-role.kubernetes.io/infra: ""
status:
  availableReplicas: 2
..SKIP..
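If an interactive editor is inconvenient, for example in a script, the same nodePlacement can be applied with a merge patch. This is a minimal sketch of an alternative to the oc edit step, and the wrapper function name patch_ingress_to_infra is hypothetical.

```shell
# Applies the nodePlacement shown above to the default IngressController
# as a JSON merge patch, so no editor session is needed.
patch_ingress_to_infra() {
  oc patch ingresscontroller/default -n openshift-ingress-operator --type=merge \
    -p '{"spec":{"nodePlacement":{"nodeSelector":{"matchLabels":{"node-role.kubernetes.io/infra":""}}}}}'
}

# Usage:
#   patch_ingress_to_infra
```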

The router pods that were running on worker nodes move over to the infra nodes.

Every 2.0s: oc get pod -n openshift-ingress -o wide bastion.openshift.ig.local: Fri Jul 19 16:10:38 2024

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

router-default-7f495f9556-jk9sn 1/1 Running 0 13s 172.16.0.179 infra01.openshift.ig.local <none> <none>

router-default-7f495f9556-wmzwn 1/1 Running 0 49s 172.16.0.180 infra02.openshift.ig.local <none> <none>

router-default-d87d8658f-btfp2 1/1 Terminating 0 4h19m 172.16.0.178 worker03.openshift.ig.local <none> <none>

watch "oc get pod -n openshift-ingress -o wide"

You can watch in real time as the router pods on the worker nodes go into Terminating, leaving only the newly created pods on the infra nodes.

Every 2.0s: oc get pod -n openshift-ingress -o wide bastion.openshift.ig.local: Fri Jul 19 16:11:13 2024

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

router-default-7f495f9556-jk9sn 1/1 Running 0 48s 172.16.0.179 infra01.openshift.ig.local <none> <none>

router-default-7f495f9556-wmzwn 1/1 Running 0 84s 172.16.0.180 infra02.openshift.ig.local <none> <none>

watch "oc get co"

authentication switches to True right away.

console is still False at this point. Once it also becomes True, the setup can be considered complete.

NAME VERSION AVAILABLE PROGRESSING DEGRADED SINCE MESSAGE

authentication 4.14.15 True False False 94s

baremetal 4.14.15 True False False 4h21m

cloud-controller-manager 4.14.15 True False False 4h25m

cloud-credential 4.14.15 True False False 5h3m

cluster-autoscaler 4.14.15 True False False 4h21m

config-operator 4.14.15 True False False 4h22m

console 4.14.15 False True False 4h15m DeploymentAvailable: 0 replicas available for console deployment...

control-plane-machine-set 4.14.15 True False False 4h21m

csi-snapshot-controller 4.14.15 True False False 4h21m

dns 4.14.15 True False False 4h20m

etcd 4.14.15 True False False 4h20m

image-registry 4.14.15 True False False 12m

ingress 4.14.15 True False False 90m

insights 4.14.15 True False False 4h15m

kube-apiserver 4.14.15 True False False 4h18m

kube-controller-manager 4.14.15 True False False 4h18m

kube-scheduler 4.14.15 True False False 4h18m

kube-storage-version-migrator 4.14.15 True False False 4h21m

machine-api 4.14.15 True False False 4h20m

machine-approver 4.14.15 True False False 4h21m

machine-config 4.14.15 True False False 4h20m

marketplace 4.14.15 True False False 4h21m

monitoring 4.14.15 True False False 88m

network 4.14.15 True False False 4h22m

node-tuning 4.14.15 True False False 4h21m

openshift-apiserver 4.14.15 True False False 4h11m

openshift-controller-manager 4.14.15 True False False 4h15m

openshift-samples 4.14.15 True False False 4h15m

operator-lifecycle-manager 4.14.15 True False False 4h21m

operator-lifecycle-manager-catalog 4.14.15 True False False 4h21m

operator-lifecycle-manager-packageserver 4.14.15 True False False 4h16m

service-ca 4.14.15 True False False 4h21m

storage 4.14.15 True False False 4h22m

NAME VERSION AVAILABLE PROGRESSING DEGRADED SINCE MESSAGE

authentication 4.14.15 True False False 2m52s

baremetal 4.14.15 True False False 4h22m

cloud-controller-manager 4.14.15 True False False 4h26m

cloud-credential 4.14.15 True False False 5h4m

cluster-autoscaler 4.14.15 True False False 4h22m

config-operator 4.14.15 True False False 4h23m

console 4.14.15 True True False 16s SyncLoopRefreshProgressing: Working toward version 4.14.15, 1 replicas available

control-plane-machine-set 4.14.15 True False False 4h22m

csi-snapshot-controller 4.14.15 True False False 4h22m

dns 4.14.15 True False False 4h22m

etcd 4.14.15 True False False 4h21m

image-registry 4.14.15 True False False 13m

ingress 4.14.15 True False False 91m

insights 4.14.15 True False False 4h16m

kube-apiserver 4.14.15 True False False 4h19m

kube-controller-manager 4.14.15 True False False 4h19m

kube-scheduler 4.14.15 True False False 4h19m

kube-storage-version-migrator 4.14.15 True False False 4h23m

machine-api 4.14.15 True False False 4h22m

machine-approver 4.14.15 True False False 4h23m

machine-config 4.14.15 True False False 4h22m

marketplace 4.14.15 True False False 4h22m

monitoring 4.14.15 True False False 89m

network 4.14.15 True False False 4h23m

node-tuning 4.14.15 True False False 4h22m

openshift-apiserver 4.14.15 True False False 4h12m

openshift-controller-manager 4.14.15 True False False 4h16m

openshift-samples 4.14.15 True False False 4h16m

operator-lifecycle-manager 4.14.15 True False False 4h22m

operator-lifecycle-manager-catalog 4.14.15 True False False 4h22m

operator-lifecycle-manager-packageserver 4.14.15 True False False 4h17m

service-ca 4.14.15 True False False 4h23m

storage 4.14.15 True False False 4h23m

watch "oc get pods -n openshift-console"

NAME READY STATUS RESTARTS AGE

console-5b748fbcfc-r29rm 1/1 Running 18 (6m26s ago) 92m

console-7ddb7fdb7d-sht6n 0/1 CrashLoopBackOff 17 (4m36s ago) 90m

console-7ddb7fdb7d-tvd5h 0/1 CrashLoopBackOff 17 (4m42s ago) 90m

downloads-96886cc64-h4mxc 1/1 Running 0 4h17m

downloads-96886cc64-z7nk4 1/1 Running 0 4h17m

NAME READY STATUS RESTARTS AGE

console-5b748fbcfc-r29rm 1/1 Terminating 18 (7m12s ago) 93m

console-7ddb7fdb7d-sht6n 1/1 Running 18 (5m22s ago) 91m

console-7ddb7fdb7d-tvd5h 1/1 Running 18 (5m28s ago) 91m

downloads-96886cc64-h4mxc 1/1 Running 0 4h18m

downloads-96886cc64-z7nk4 1/1 Running 0 4h18m

NAME READY STATUS RESTARTS AGE

console-7ddb7fdb7d-sht6n 1/1 Running 18 (5m35s ago) 91m

console-7ddb7fdb7d-tvd5h 1/1 Running 18 (5m41s ago) 91m

downloads-96886cc64-h4mxc 1/1 Running 0 4h18m

downloads-96886cc64-z7nk4 1/1 Running 0 4h18m

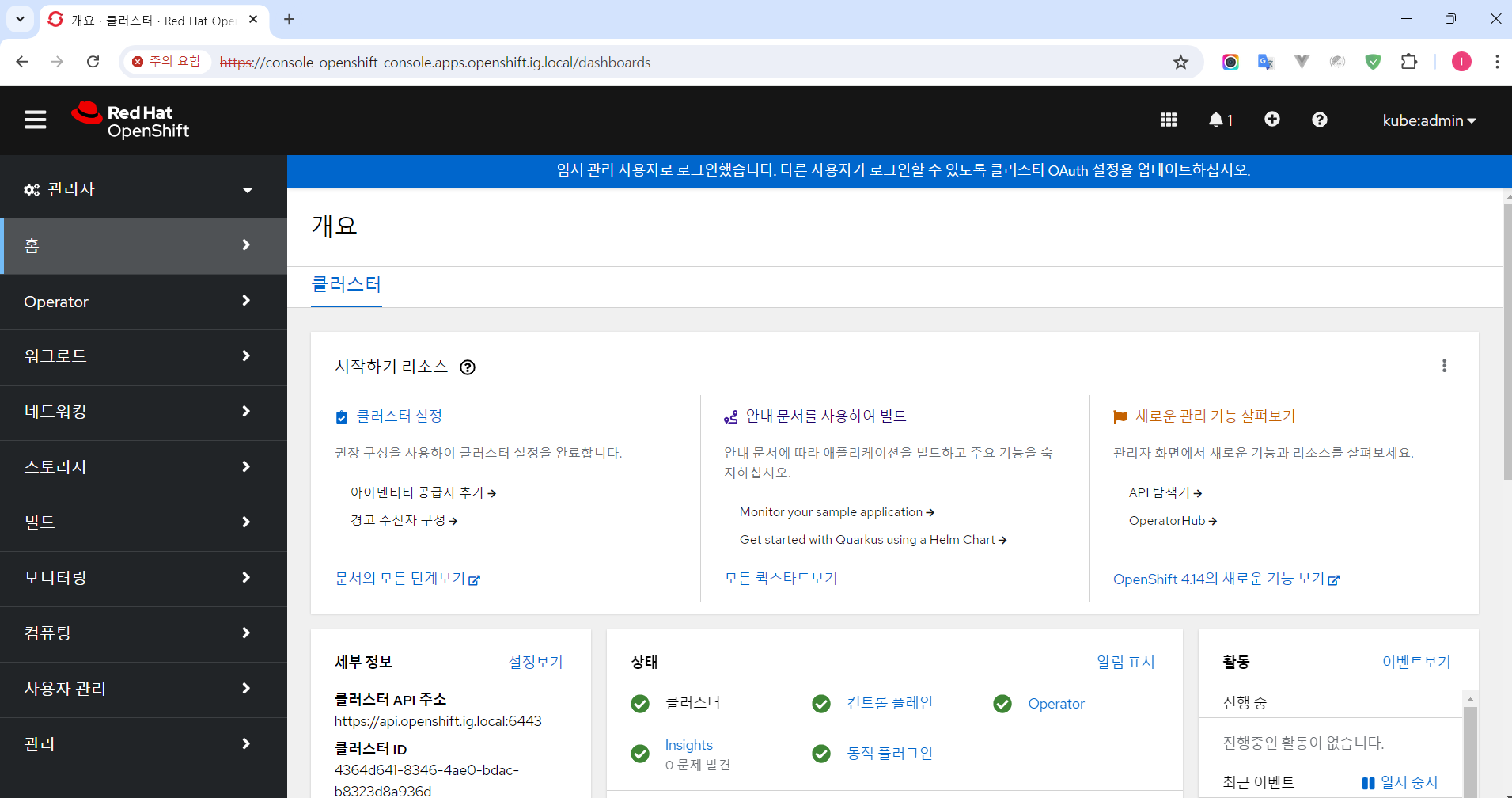

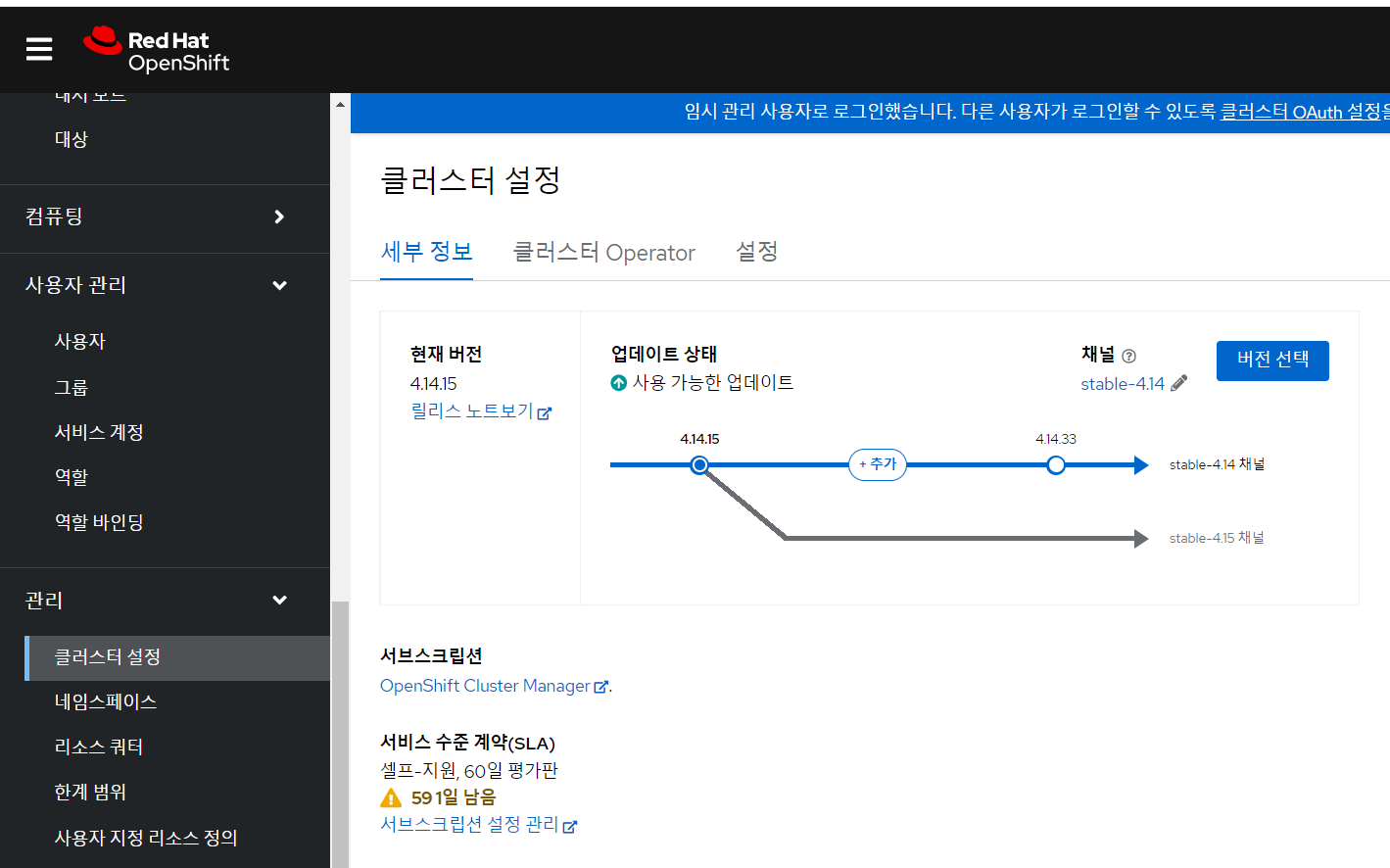

1.8. Final Check and Console Access

oc get co

Every operator, including console, is now fully available.

NAME VERSION AVAILABLE PROGRESSING DEGRADED SINCE MESSAGE

authentication 4.14.15 True False False 5m50s

baremetal 4.14.15 True False False 4h25m

cloud-controller-manager 4.14.15 True False False 4h29m

cloud-credential 4.14.15 True False False 5h7m

cluster-autoscaler 4.14.15 True False False 4h25m

config-operator 4.14.15 True False False 4h26m

console 4.14.15 True False False 3m14s

control-plane-machine-set 4.14.15 True False False 4h25m

csi-snapshot-controller 4.14.15 True False False 4h25m

dns 4.14.15 True False False 4h25m

etcd 4.14.15 True False False 4h24m

image-registry 4.14.15 True False False 16m

ingress 4.14.15 True False False 94m

insights 4.14.15 True False False 4h19m

kube-apiserver 4.14.15 True False False 4h22m

kube-controller-manager 4.14.15 True False False 4h22m

kube-scheduler 4.14.15 True False False 4h22m

kube-storage-version-migrator 4.14.15 True False False 4h26m

machine-api 4.14.15 True False False 4h25m

machine-approver 4.14.15 True False False 4h26m

machine-config 4.14.15 True False False 4h25m

marketplace 4.14.15 True False False 4h25m

monitoring 4.14.15 True False False 92m

network 4.14.15 True False False 4h26m

node-tuning 4.14.15 True False False 4h25m

openshift-apiserver 4.14.15 True False False 4h15m

openshift-controller-manager 4.14.15 True False False 4h19m

openshift-samples 4.14.15 True False False 4h19m

operator-lifecycle-manager 4.14.15 True False False 4h25m

operator-lifecycle-manager-catalog 4.14.15 True False False 4h25m

operator-lifecycle-manager-packageserver 4.14.15 True False False 4h20m

service-ca 4.14.15 True False False 4h26m

storage 4.14.15 True False False 4h26m

oc login -u [USERNAME] -p [PASSWORD] https://api.openshift.ig.local:6443

Login failed (401 Unauthorized)

Verify you have provided the correct credentials.

The console credentials (ID/password) can be found with the command below.

openshift-install wait-for install-complete --dir=/var/www/html/ocp --log-level=debug

INFO Checking to see if there is a route at openshift-console/console...

DEBUG Route found in openshift-console namespace: console

DEBUG OpenShift console route is admitted

INFO Install complete!

INFO To access the cluster as the system:admin user when using 'oc', run 'export KUBECONFIG=/var/www/html/ocp/auth/kubeconfig'

INFO Access the OpenShift web-console here: https://console-openshift-console.apps.openshift.ig.local

INFO Login to the console with user: "kubeadmin", and password: "[PASSWORD]"

DEBUG Time elapsed per stage:

DEBUG Cluster Operators: 34m27s

INFO Time elapsed: 34m27s

user: “kubeadmin”, and password: “[PASSWORD]”

The kubeadmin password can also be found in the following places.

The /var/www/html/ocp/auth/kubeadmin-password file

The last lines of the /var/www/html/ocp/.openshift_install.log log
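Reading the password straight from the file avoids copy-and-paste mistakes such as the 401 error shown earlier. This is a minimal sketch: the function name login_kubeadmin is hypothetical, and it assumes the installer's standard auth/kubeadmin-password layout under the install directory.

```shell
# Logs in as kubeadmin using the password file written by the installer.
#   $1: install directory (the --dir value passed to openshift-install)
#   $2: API server URL
login_kubeadmin() {
  local dir="$1"
  local api="$2"
  local pw_file="${dir}/auth/kubeadmin-password"
  # Fail early with a clear message if the password file is missing.
  [ -r "$pw_file" ] || { echo "cannot read ${pw_file}" >&2; return 1; }
  oc login -u kubeadmin -p "$(cat "$pw_file")" "$api"
}

# Usage:
#   login_kubeadmin /var/www/html/ocp https://api.openshift.ig.local:6443
```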

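An additional cluster-admin account should be created. (Using kubeadmin is not recommended.)

https://console-openshift-console.apps.openshift.ig.local/

Logging in at this URL with the username and password from the log above brings up the console.

Because kubeadmin is a temporary administrative user, it should later be removed and replaced with a new administrator account.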

References

https://docs.openshift.com/container-platform/4.14/web_console/web-console-overview.html
